The re-election of Donald Trump was a national trigger for many Americans. Days after the election, Washington, D.C., Pittsburgh, New York City, Portland, and Seattle saw protests drawing hundreds to thousands of people. LA Times and WaPo subscribers cancelled their subscriptions because the papers did not endorse the Democrat candidate. Feminists shaved their heads and vowed sex strikes. Celebrities promised to leave the country. Some Facebook users changed their profile pictures to solid black in protest of Trump’s win. Even Goodreads members were exhorted to leave the platform because Jeff Bezos (owner of Goodreads and WaPo) had the nerve to congratulate Trump.

AP Photo/Ted S. Warren

Another popular protest involved X (formerly Twitter). Elon Musk’s $44 billion purchase of Twitter had already provoked plenty of reaction, especially when he began reinstating accounts banned by the previous leadership. However, it was Musk’s growing support of Donald Trump and the results of the 2024 election that proved to be the tipping point.

Shortly after the election, numerous celebrities, academics, news outlets, companies, and even scientists announced their departure from X. Whoopi Goldberg, Elton John, Stephen King, Jamie Lee Curtis, Don Lemon, Jim Carrey, Jack White, Bette Midler, and many more notified their followers that they would be leaving X. Former Southern New Hampshire University president Paul LeBlanc declared he was leaving X, as did Isaac Kamola, a political science professor at Trinity College. Even The Guardian pledged to stop posting on the site.

But rather than leaving social media altogether, most of these individuals simply migrated to another site.

Bluesky: A Liberal Echo Chamber?

Over at the American Prospect, Ryan Cooper advised liberals to move to a site “somewhat friendlier to Democrats,” namely Bluesky.

Twitter used to be somewhat friendlier to Democrats, but Elon Musk bought it and turned it into another pit of white supremacist vipers. All Democrats, particularly famous ones, should leave the husk of Twitter at once for Bluesky, by far the most favorable replacement, as Threads is run by another censorial right-wing billionaire.

Interestingly, Threads was the previous “safe space” for Twitter exiles. However, it was quickly supplanted by Bluesky. (In part because, apparently, Threads owner Mark Zuckerberg became “another censorial right-wing billionaire.”)

The Daily Dot describes the social network Bluesky this way:

Bluesky was initially founded by Jack Dorsey in 2019, as an exploration into decentralizing Twitter. Dorsey has since left, and it is now owned and primarily operated by Bluesky Social, PBC.

As a decentralized social network, the network has an open-source framework built by its in-house team. This framework adds further transparency to how the network is built, what’s being developed, and why. A lack of transparency has been among the chief complaints about Twitter in recent years.

Ironically, in May 2024, Jack Dorsey left the Bluesky board and encouraged followers to remain on X. Dorsey feared that the trajectory of Bluesky’s current leadership would lead to “repeating all the mistakes” initially made at Twitter (“mistakes” later reversed by Musk).

Nevertheless, after Trump’s re-election, Bluesky saw a wave of new users: it gained 1.5 million new accounts, and its traffic surged 500% after the election.

This has left some gushing. “Bluesky Is Becoming ‘Blue Heaven’ for ‘The Resistance,’” cheered one journalist. But while some describe Bluesky as “a place of joy,” a “safe space” from hate, misogyny, and transphobia, others see it as a growing echo chamber.

At the Financial Times, Jemima Kelly wrote,

That there is a new place for such people to congregate is all well and good, but the problem is that the chatterati — very nice and non-conspiracy-theorising and non-overtly-racist though they may be — tend to coalesce around some quite similar viewpoints, which makes for a rather echoey chamber. I’m not sure I have ever felt more like I’m at a Stoke Newington drinks party than when I’m browsing Bluesky (including when tucking into Perelló olives and truffle-flavoured Torres crisps in actual N16).

An even more fundamental problem is that nobody on Bluesky seems to actually mind that they are in an echo chamber. When I told a friend, who happens to be an enthusiastic Bluesky user, what I was writing about this week, she replied “oh yes, but it is an echo chamber, that’s what people like about it, it’s lovely.” (bold, mine)

Kelly is not the only one to see Bluesky as an ideological echo chamber. So does Free Speech Union founder Toby Young, who called the site an “echo chamber” and said, “One of the ironies of people on the Left deserting X for Bluesky is that they are often the very same people who complain about how politics has become ‘too polarised’.”

Nevertheless, many Bluesky users are reveling in their newfound “echo chamber.” One user, Shaun, writes, “an echo chamber for the left sounds like a nice idea.” Another says, “It’s not an Echo Chamber it’s a No Assholes Chamber.” Still another: “‘you don’t want to hear conservative voices, you just want an echo chamber’ well yes.”

Apparently, for some, the very attraction of Bluesky is that it is a polarized ideological silo.

Moderation Tools for an Echo Chamber

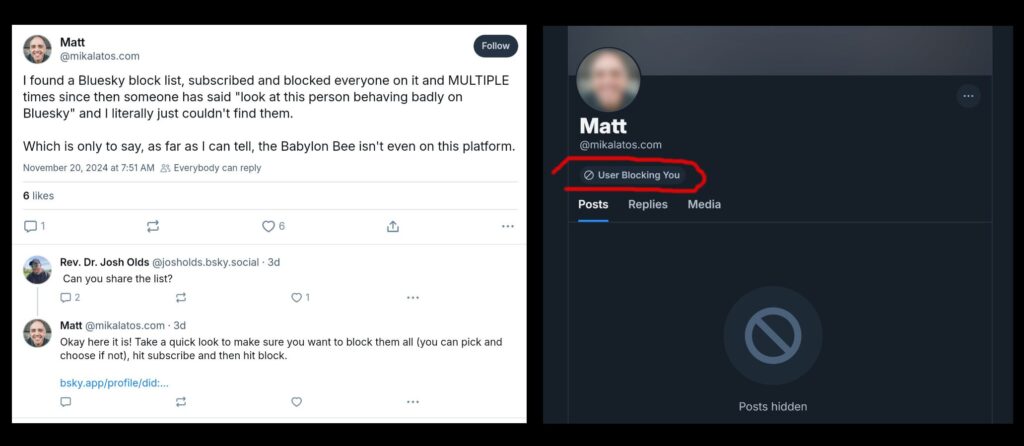

One of the features Bluesky offers to help users moderate their feeds is what some have nicknamed the ‘nuclear block,’ which prevents all interaction with blocked accounts. Bluesky, like Threads, makes its blocks public. It also features a widely used “shared blocklists” tool that allows people to compile lists of users they deem toxic (or racist, xenophobic, transphobic, pro-Nazi, or just MAGA cockroaches). Others can subscribe to those lists and automatically block or mute anyone on them.

Apparently, after minimal time on Bluesky and a few rather uncontroversial posts, I found myself on one such blocklist. The reasons for my inclusion were not stated.

It appears that blocklists were among the “moderation tools” Bluesky users requested. As a board member, Jack Dorsey had feared this kind of thing. In an interview with Pirate Wires, Dorsey explained:

Over at Bluesky, “little by little, they [the users] started asking Jay [Graber, the CEO] and the team for moderation tools, and to kick people off. And unfortunately they followed through with it,” Dorsey told Pirate Wires.

“That was the second moment I thought, uh, nope,” Dorsey added. “This is literally repeating all the mistakes we [Twitter] made as a company. This is not a protocol that’s truly decentralized. It’s another app. It’s another app that’s just kind of following in Twitter’s footsteps, but for a different part of the population.”

Add to this the fact that Bluesky’s moderation team strictly enforces community guidelines that forbid promoting “hate or extremist conduct.” Of course, what counts as “hate” or “extremist” is defined in a way that serves political progressives. For example, Bluesky was initially hailed as “trans friendly,” attracting large numbers of LGBTQ+ users. Because the site was initially invite-only, this ensured a more insular demographic makeup. But this approach was not without problems. As Wired reported during Bluesky’s early days,

As people have flocked to Bluesky in recent weeks, the platform has been hit with a problem as old as the internet: nudity. Among those who recently joined the decentralized social network established by Twitter cofounder and former CEO Jack Dorsey are large contingents of the tech, trans, and sex worker communities who have brought their own social norms to the chaotically exciting social platform.

Part of those “social norms” involved nude pictures.

The spread of nudity “may have been affected in part by the potential future growth of Bluesky,” but it was the strict enforcement of content moderation, specifically of “hate speech” related to LGBTQ+ issues, that kept Bluesky a “trans friendly” safe space. According to MSN, for example, many conservative users “face abuse and censorship” on Bluesky. Users identifying as MAGA or Republican were summarily added to blocklists. Others have reported having their accounts shut down after posting the simple phrase “there are only two genders.”

In this way, “moderation tools” can become a method of censorship. When a platform’s community guidelines tell users not to target “harassment or abuse” at any individual or group, “including but not limited to, sexual harassment and gender identity-based harassment,” as Bluesky’s do, then simple biological facts like “there are only two genders” become “hate speech.”

The Problem with Echo Chambers

But while such content moderation may provide a sense of safety to some, creating social media echo chambers carries inherent problems.

For one, echo chambers can perpetuate emotional fragility.

In their important book, “The Coddling of the American Mind: How Good Intentions and Bad Ideas Are Setting Up a Generation for Failure,” Greg Lukianoff and Jonathan Haidt write,

“A culture that allows the concept of ‘safety’ to creep so far that it equates emotional discomfort with physical danger is a culture that encourages people to systematically protect one another from the very experiences embedded in daily life that they need in order to become strong and healthy.”

Indeed, contact with opposing points of view and ideological diversity is a necessary part of maturity and emotional growth. By equating heterodox opinions with actual threats to mental or physical health, we not only insulate our beliefs from interrogation but also sensitize ourselves to countless perceived micro-aggressions. Thus, the statement “there are only two genders” is controversial only to the individual unwilling to consider the wealth of contrary data, or emotionally beholden to its unquestioned denial. In this way, echo chambers perpetuate emotional fragility.

Echo chambers also perpetuate an inaccurate view of reality.

In his article “Echo Chambers and the Ethics of Blocking,” Aaron Ross Powell concludes,

…the risk of social media ideological uniformity isn’t the uniformity itself, but that social media leads us into believing that uniformity is representative of the whole. (italics in original)

This is precisely what happened in the 2024 election. Those most distressed by the results were those who believed the rhetoric within their own circles. Pollsters have admitted as much, acknowledging that they missed rural voters, conservatives, and young men. Their flawed results were evidence of an echo chamber they had constructed. When the voices you’ve surrounded yourself with have convinced you that they represent the mainstream, the average, the consensus, the Truth, you are in an echo chamber.

Michelle Quirk, writing in Psychology Today, describes this as “the false consensus effect.”

This cycle is particularly strong in today’s digital world. Social media platforms use algorithms that amplify echo chambers by curating content that aligns with users’ past interactions. As individuals continually engage with similar content, they become increasingly entrenched in their views and less likely to encounter diverse perspectives. These algorithms exacerbate the false consensus effect, fostering a perception that their views are more popular and correct than they are.

Living inside an echo chamber reinforces an inaccurate view of our world and the people in it. The more we shut out opposing points of view, and the people who hold those views, the more detached we become from reality.

Echo chambers reinforce misinformation and confirmation bias.

In her article “Echo chambers: how they’re created and how to avoid them,” Story Pennock suggests,

…misinformation thrives in echo chambers. If you only see posts that you agree with, you’re less likely to be critical of false or misleading videos or memes. This is called confirmation bias. When all the posts you see reinforce your point of view, you will not encounter opposing opinions, and you may end up spreading false information.

Curating a social media feed that sifts out certain news stories, articles, and opinions makes us more susceptible to misinformation. As in the folktale “The Emperor’s New Clothes,” when we spend our time listening to sycophants, we often won’t recognize our own nakedness (logical and ideological) until it is too late.

Finally, echo chambers require vigilant content screening, enforcement, and enforcers. In the same way that the human body fights off infection, echo chambers require “antibodies” designed to locate and eliminate contrary ideas or unacceptable conduct. This is often done through moderation teams. While such moderation is absolutely necessary, it can be abused. It is not uncommon to hear of social media users whose posts are blocked or whose accounts are closed over rather trivial or questionable infractions. On a larger scale, Mark Zuckerberg eventually confessed that he was wrong to censor massive amounts of COVID-19 content under pressure from the Biden administration. The point is this: content moderation is a double-edged sword.

Meanwhile, the influx of new users has forced Bluesky to quadruple its moderation team. Compounding this is the demographic makeup of the platform. With ideological liberals flocking to the site as a “safe space,” reporting even minor infractions has become the status quo. Indeed, the Bluesky Safety team recently posted that it received 42,000 moderation reports in a single 24-hour span, compared with 360,000 in all of 2023. The flood of reports is likely attributable to the expectations of the account holders. In an echo chamber, users become attuned to perceived micro-aggressions and are quick to report potential offenders to the hall monitors.

Curating “Diversity” on Social Media

Of course, there is a time for like-mindedness and group homogeneity. Congregating with people who hold similar views can be refreshing and life-giving. Nevertheless, we must always guard against insulating ourselves from differing points of view and tribes. Since most agree that echo chambers can be unhealthy and should mostly be avoided, how can we curate ideological diversity in our social media feeds?

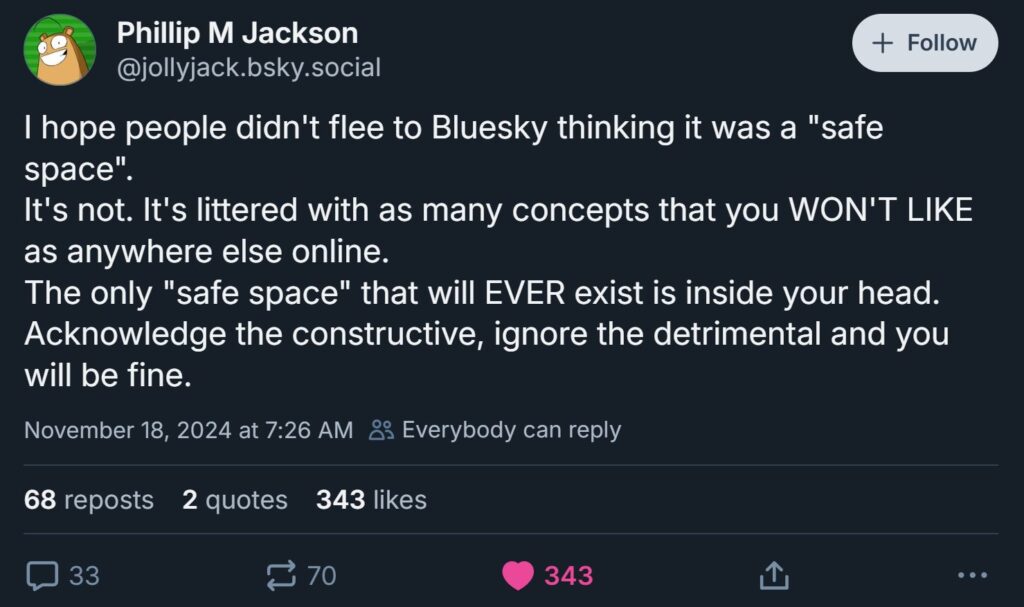

One Bluesky artist, Jackson, wisely wrote,

I hope people didn’t flee to Bluesky thinking it was a “safe space”. It’s not. It’s littered with as many concepts that you WON’T LIKE as anywhere else online. The only “safe space” that will EVER exist is inside your head. Acknowledge the constructive, ignore the detrimental and you will be fine.

Jackson is correct in asserting that the only real “safe space” is the one between your ears. Cultivating emotional toughness and tolerance for differing points of view is important to healthy online engagement. Of course, this doesn’t mean we should tolerate abuse or misconduct. But in most cases, such bad behavior can simply be ignored. Don’t comment on the offending post. Just scroll past. The long-standing internet dictum “Don’t feed the trolls” still applies.

Of course, blocking those who repeatedly demean, defame, threaten, or bully you online is entirely valid. While we should be able to tolerate civil discourse, objections, and disagreements, we are under no obligation to endure everyone’s tantrums. Nevertheless, curating your social media circle based on ideological homogeneity can be dangerously cultish.

Thus, it’s important to distinguish between those who actively troll you, make real threats, or refuse good-faith engagement, and those who simply disagree with and challenge your point of view.

This is why Pennock suggests the obvious,

…diversify the types of news and entertainment sites that you follow to get a diverse range of perspectives. Follow left-leaning and right-leaning sites.

The single best thing we can do to ensure we don’t tumble into an echo chamber is to entertain and engage those outside our ideological, political, or spiritual circle.

In GCF Global’s article “What is an Echo Chamber,” the author explains how “filter bubbles” can actually “prevent you from finding new ideas and perspectives online.”

The Internet also has a unique type of echo chamber called a filter bubble. Filter bubbles are created by algorithms that keep track of what you click on. Websites will then use those algorithms to primarily show you content that’s similar to what you’ve already expressed interest in. This can prevent you from finding new ideas and perspectives online.

Simply put: We can avoid an echo chamber by broadening — not shrinking — our filter bubble.

For this reason, I intentionally strive to keep my social media feed eclectic. Between Facebook, X, Instagram, Bluesky, and Threads, I follow an extremely diverse crowd: atheists, Christians, religious progressives, Democrats, Republicans, Libertarians, feminists, queers, trans, detransitioners, political pundits, independent journalists, mainstream journalists, mainstream health experts, flat earthers, evolutionists, young earth creationists, and the list goes on. I follow the Satanic Temple and the Church of Satan on X. I follow Stephen King and the NYT. I follow liberal authors with whom I disagree on just about everything. I even follow people whom I consider heretics. Striving to interact with different people and ideas is, in the long run, far healthier than curating an echo chamber.

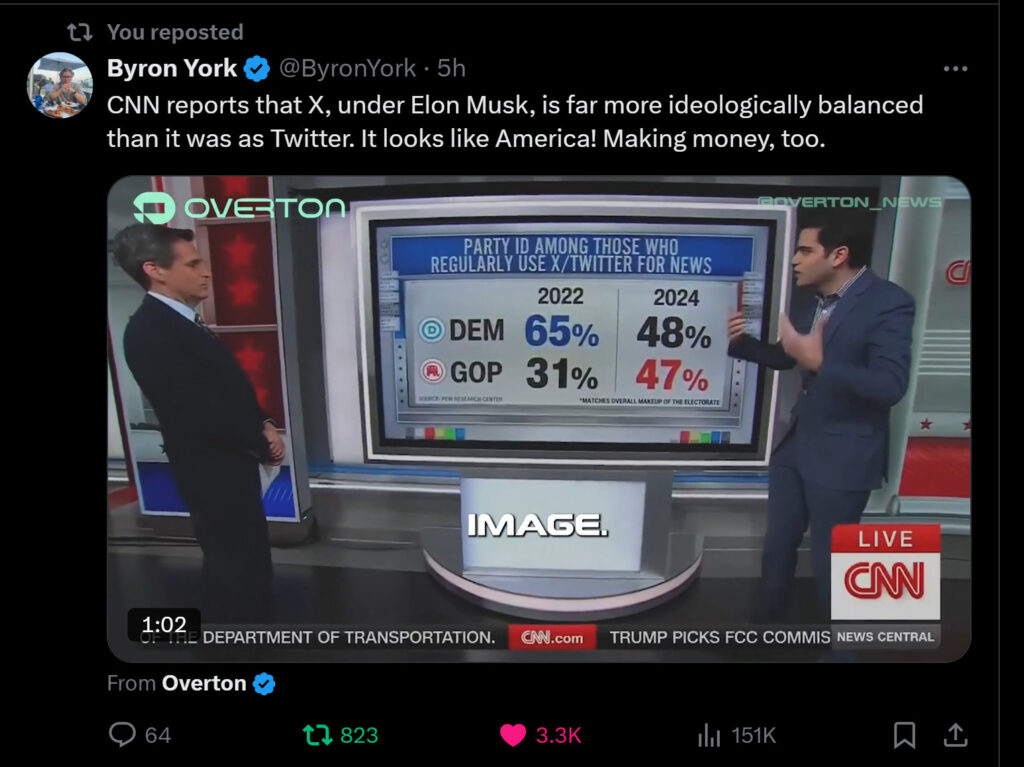

Despite the recent migration from X, the site still far outpaces Bluesky in use. According to Forbes, “Bluesky has about 14.5 million total users, while X said in March about 250 million people use the social platform every day.” Even more interesting, shortly after the great Xodus to Bluesky, CNN reported that under Elon Musk, X is far more ideologically balanced than it was as Twitter.

Indeed, such political balance should be hailed as a model for healthy discourse, not a reason to split.

That is, unless ideological uniformity is one’s goal.

Anyone is susceptible to falling into an echo chamber: liberal, conservative, or somewhere in between. Demonizing entire groups of people and forcing them outside our bubble may make us feel “safe” and intellectually superior. But in the long run, it creates a “false consensus effect,” making us ever more emotionally fragile and out of touch with reality. Of course, curating our social media feed to reflect our unique interests and values is understandable and permissible. The problem arises when that curation becomes an extension of small-mindedness, bigotry, and fear.

An even better solution is to leave social media entirely. I drastically stepped back from all of it in 2020 and found my mental health improved dramatically. I’ve since deleted my Facebook account and have no interest in going back to any others. I’ve been blogging again and connecting with bloggers, and let me tell you, long-form content is just the best. Someone has a viewpoint I disagree with? They have 5000+ words to articulate their viewpoint and reasoning, leaving me much more open to conversation. I’ve been rubbing shoulders with all kinds of people I would avoid in other places, because in long-form, their views are fascinating. It’s also been nice to reconnect with people with very traditional beliefs in God, family, and the heroic ideal. I haven’t found many of those even in Realm Makers.

Good for you, Kessie! I’ve actually wondered at where you’ve been, not having seen you on FB. Great to know you’re doing well.

I agree with Kessie up there ^

Not on social media anymore, just have the blog. I don’t schmooze much with other blog-focused types of writers, just because they are not as easily connected as they would be on social media. The longer form content of blogs generally selects for more thoughtful reading and responses. When you are limited like X/Twitter is/was, the only way to generate the most discussion is through rhetoric and polemic. It’s not any wonder people of a certain kind would flee from that.